Real-Time Object Tracking : From Fruits to Plates

How to leverage infrastructure platforms, such as Roboflow, for rapid machine learning development with minimal training data. See https://github.com/Kelvinayala10/license-plate-recognition for more.

Figure 1 : Real-time shopping cart demonstration setup in my cubicle

Real-time object tracking and classification is one of the many applications to emerge from the rise of modern machine learning. The broader field is commonly referred to as computer vision, and you would be hard-pressed to find an area of your life where this technology is not being introduced. In healthcare, computer vision is being used to detect diseases quickly from a patient's imaging data so that treatment can begin as soon as possible, drastically improving the odds of recovery. In energy, it is being used to monitor hyperspectral camera feeds for gas and oil leaks so they can be resolved promptly before they endanger an area. Lastly, automakers continue to embed computer vision into their product offerings, ranging from basic ADAS (Advanced Driver Assistance Systems) functions such as lane departure warnings to Tesla's long-shot aspiration of creating fully self-driving L5 vehicles.

Wherever you look in society today, you will find someone trying to use machine learning to interact with it and extract information from it. During my time as a machine learning engineer at Texas Instruments, this presented an opportunity to position TI products in new markets outside of the AV (Automated Vehicle) and industrial robotics automation space. Specifically, there was an effort to integrate the latest AM68x processor line into retail shopping applications. The AM68x product family offered 8 TOPS of performance for 1-8 cameras, allowing for a myriad of applications in machine vision and smart traffic. Retail automation was judged to be a natural fit for this real-time embedded machine learning device, and one where development could closely follow existing software flows and processes.

Figure 2 : Real-time shopping cart demonstration setup video

However, it quickly became apparent that creating a retail checkout automation system would introduce many variables. Even though similar efforts had been conducted for ADAS software that detects vehicles, as shown in the demonstration below, acquiring data for each type of product in a retail setting such as a grocery store would be much more difficult than acquiring vehicular training data.

Whereas vehicular data could be categorized into a single class (vehicle) and then broken down with a few labels such as car/truck, typical retail products could not be categorized so easily. For example, what features separate a box of cereal from a box of popcorn? How detailed must a frame of data be for such a system to distinguish these products quickly and at scale, while remaining easy for customers to use?

Figure 3 : Edge AI Automotive Object Detection Model Demonstration

Figure 4 : Edge AI Automotive Semantic Segmentation Model Demonstration

Before we continue to explore the answers to these questions, let me do some general housekeeping on the technicalities of these deep learning endeavors. To begin, review the standard development and deployment flow publicly available in the TI Deep Learning repository here. The figure below gives an overall diagram of how machine learning applications were developed by leveraging a mix of in-house and open-source tools/frameworks.

To develop these TinyML products, a large overlap is needed between the otherwise disjoint domains of data science and embedded systems engineering. Therefore, I will only address key points in this post; more information for the curious reader can be found in the official TI documentation here.

Any machine learning project begins with acquiring the proper data to build training, validation and test sets. In the best of cases, there are publicly available datasets, such as Microsoft's Common Objects in Context (COCO for short), that provide thousands of images from which a class for a specific use case can be distilled. On the other hand, there may be no such data available at all, in which case it is necessary to build datasets from scratch or augment an existing dataset in unique ways to meet the project parameters. From here, we decide on key details of the modeling, such as which frameworks would perform best and how much quantization is needed given the limits of the compute environment on which the model will run. Once this is decided, further refinement options are examined to tune accuracy and performance for the platform. Lastly, deployment is typically done through a controlled software repository that allows specific build and environment parameters to be managed in order to keep development and overall performance stable in production settings.

Figure 5: Real-time object detection development and deployment workflow
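As a minimal sketch of the first step in this workflow, the snippet below shuffles a list of image paths and splits it into training, validation and test sets. The 70/20/10 ratio, the fixed seed, the file names and the `split_dataset` helper are all assumptions made for illustration; they are not taken from the TI tooling.

```python
import random


def split_dataset(items, train_frac=0.7, val_frac=0.2, seed=42):
    """Shuffle items and split them into train, validation and test lists.

    Whatever is left after the train and validation fractions becomes the test set.
    """
    if not 0 < train_frac < 1 or not 0 <= val_frac < 1 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave room for a test set")

    shuffled = list(items)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)

    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)

    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical file names standing in for collected images.
image_paths = [f"images/item_{i:04d}.jpg" for i in range(200)]
train, val, test = split_dataset(image_paths)
print(len(train), len(val), len(test))
```

Keeping the split deterministic makes it easier to compare models trained at different times, since the test images never leak into training between runs.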

This process is the general roadmap of most machine learning projects that one may encounter; however, each specific use case will always have its own unique set of challenges and variables to overcome.

For example, the AM6XA product family, as is the case with any embedded system, did not have the luxury of the near-infinite elastic cloud compute that many frameworks today, such as TensorFlow, typically assume. For this reason, efficient scaled-down alternatives such as TFLite and ONNX were leveraged. Furthermore, proprietary hardware accelerators were built and leveraged to further optimize these frameworks for the device. The convergence of all these layers of hardware and software enables object detection on a device platform smaller than a typical smartphone.
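To make the quantization trade-off mentioned earlier more concrete, here is a minimal sketch of 8-bit affine quantization, the general idea behind integer inference on constrained devices. It is written in plain Python purely for illustration; the function names and sample weights are hypothetical, and the actual TI and TFLite toolchains handle this step with their own calibration logic.

```python
def quantize_params(values, qmin=-128, qmax=127):
    """Derive a scale and zero point that map the float range onto int8."""
    # Include 0.0 in the range so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # guard against an all-zero input
    zero_point = round(qmin - lo / scale)
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to integers, clamping to the int8 range."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(quantized, scale, zero_point):
    """Recover approximate floats from the integer representation."""
    return [(q - zero_point) * scale for q in quantized]


# Made-up weights, used only to show the round trip.
weights = [-1.0, -0.25, 0.0, 0.5, 2.0]
scale, zero_point = quantize_params(weights)
q_weights = quantize(weights, scale, zero_point)
restored = dequantize(q_weights, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q_weights, max_error)
```

Each value now fits in one byte instead of four, at the cost of a rounding error bounded by roughly half the scale. That error is the accuracy being traded for memory and speed.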

The exact implementation of the software varies; however, to illustrate the flow of an object detection application, I have included the figure below for reference. Note that a mixture of Python and C++ is used to benefit from the speed of C++ libraries while retaining the readability and ease of maintenance of Python.

Figure 6 : AM6XA Python/CPP apps Application Flow

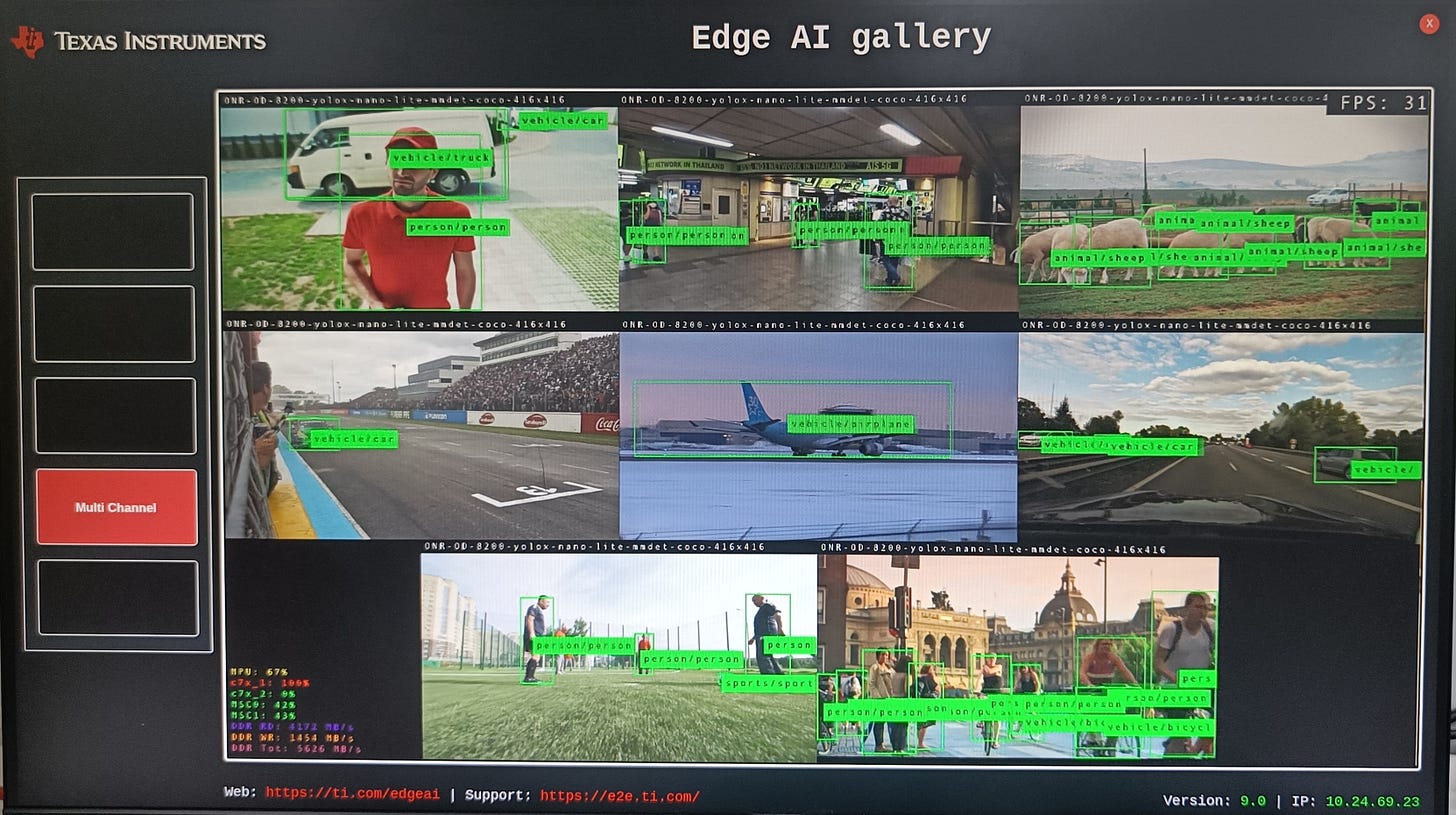

Once the foundation of a working object detection pipeline is established, the application becomes easily adaptable to different use cases, as shown in the many examples of the Edge AI gallery below. In the same way that one trains and tunes an application to detect vehicles, it can be tuned for people, animals and foods as long as there is a source of quality data to draw insight from.

Figure 7 : Edge AI Demo Gallery

It is here that I stumbled upon a common issue that plagues machine learning endeavors in industry. Often there is no quality data available, and when there is none, one must collect it oneself. Thus, many engineering hours are consumed just in the initial effort of acquiring the necessary data, because it must be collected, filtered and labeled manually in order to be of value as a source of truth for training a model.

For the retail shopping cart demo, many hours were spent on a limited set of items found at the grocery store. Even for a proof-of-concept covering common everyday items that have not changed much over time, such as fruits and Pringles cans, many hours were still needed to collect, augment and filter the hundreds of images required to attain reliable results. If this approach were scaled to every single item at a retail store, or even half of them, there would not be enough resources on a team to do so.

Thus, when I began to develop a license plate recognition system, I started to brainstorm how best to navigate this conundrum and looked into the solutions currently being explored. It is here that I first encountered Roboflow and its dataset curation offerings.

Figure 8 : Roboflow Machine Learning Dataset Development

By leveraging Roboflow’s infrastructure features such as automatic labeling, I was able to vastly decrease the development time needed to create a labeled dataset that would ultimately be used to train the machine learning model to be deployed to production. This removed a large hurdle from the initial ramp-up of the project and let me focus more on how to best tune model performance. Automatic labeling is enabled by Grounding DINO, a zero-shot object detection model made by combining the Transformer-based DINO detector with grounded pre-training. According to the Grounding DINO paper abstract, the model achieves "a 52.5 AP on the COCO detection zero-shot transfer benchmark".

This allows most images (in my case, more than 97% of my input images) to be labeled correctly with proper bounding boxes so that they can then be used for training, all while maintaining acceptable performance metrics and requiring orders of magnitude less manual data collection work.
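One way such a labeling rate could be checked is to hand-label a small sample and count how many automatic boxes overlap the manual ones well enough. The sketch below does this with intersection over union (IoU). It is an illustration rather than the exact procedure I used: it assumes one plate per image, an IoU threshold of 0.5, and hypothetical file names and made-up coordinates.

```python
def iou(box_a, box_b):
    """Intersection over union for boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Width and height are clamped at zero when the boxes do not overlap.
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def label_agreement(auto_boxes, manual_boxes, threshold=0.5):
    """Fraction of manually labeled images whose automatic box matches."""
    hits = sum(
        1
        for name, reference in manual_boxes.items()
        if name in auto_boxes and iou(auto_boxes[name], reference) >= threshold
    )
    return hits / len(manual_boxes)


# Made-up coordinates for three hypothetical images, one plate each.
manual = {
    "car_001.jpg": (100, 200, 300, 260),
    "car_002.jpg": (50, 80, 210, 130),
    "car_003.jpg": (400, 310, 560, 360),
}
auto = {
    "car_001.jpg": (104, 198, 298, 262),
    "car_002.jpg": (60, 85, 215, 128),
    "car_003.jpg": (10, 10, 60, 40),  # a miss: no overlap with the manual box
}
rate = label_agreement(auto, manual)
print(f"{rate:.0%} of sampled labels agree")
```

A stricter threshold gives tighter boxes at the cost of rejecting more automatic labels, so the value is worth tuning against how precise the downstream model needs to be.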

Figure 9 : Automatic Labeling of License Plates with Grounding DINO

Because it eases this bottleneck in the machine learning development cycle, I foresee this approach becoming more popular as companies look for efficiencies in their teams and as start-ups adopt newer technologies to get the most out of smaller engineering teams. Automatic labeling does bring its own set of issues, however, as models will naturally only be as accurate as the labeling system used to curate their training data. Still, in a technology landscape where time-to-market is king, this path excels in its ability to quickly generate a proof-of-concept that can secure a contract or entice customers with future offerings once the initial response from the public has been measured.

I am looking forward to how these platforms continue to evolve and how engineers will best leverage them for their purposes in industry. It was a thrilling learning experience to be able to tackle this common problem with a new approach and I was very pleased with the final inference results as shown below.

Figure 10 : Results of License Plate Recognition System